Versatile C++ game scraper: Skyscraper

-

@Clyde Luckily I have the code in the release so I didn't lose anything. But it makes my eyes twitch to think about this even being possible. How can I trust Github to not do this again? If it is in fact their fault of course. Maybe I did something in my sleep on one of my other developer machines that would result in this... I just can't think of how.

@mitu Yeah, good catch, I hadn't seen that. I have no idea how it would get detached like that. I don't have the code on my current developer machine and `git pull` doesn't give it to me either. I'll have to check the other developer machines in a couple of days when I can get to them.

-

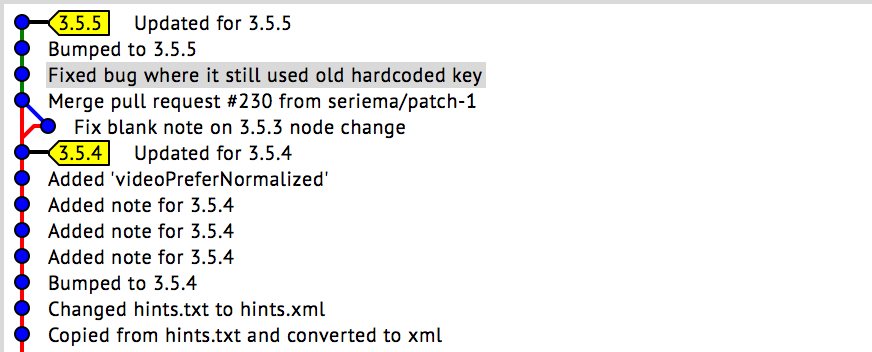

@muldjord I cloned the repo and the 'missing' commits show up (screenshot from gitk):

My guess you checked out 4.3.4 and then added the subsequent commits without a branch ? My git-fu is not so strong.

-

@mitu I'll have to check the bash history on those other machines. It might shed some light on this. I really hope this is my own fault.

-

@mitu , @Clyde Just a quick update on my little problem. I think I know what has happened. I probably made the 3.5.5 release on a different machine and forgot to push the local commits to master before tagging and pushing the tag to origin. So the code change from 3.5.4 to 3.5.5 was never pushed to master, but the tag was. I had no idea you could even do that, and I guess it's a testament to how well I actually remember to commit the changes since this has never happened to me before. I must've been tired.

I am really happy this was an error on my part. I was worried this was some weird mirror-caching Github error. It is not. Phew. :)

Guess who'll never forget to push local commits to master before tagging again. Ah, who am I kidding, I'll probably forget it again next week. :D

-

@muldjord Maybe there's a way to automate pushing the commits before pushing the tags, or some other method to keep both machines on par? (I don't know enough bash-fu for more than this suggestion.)

-

I'll get to the other machine tomorrow and push the commits to master. I actually think that will fix it as the tag seems to be bound to a commit, which just happens to not be glued to master yet. So if that's the case it's not a big deal if I forget in the future.
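
The situation described above (a tag pushed while its commit never made it to master) can be guarded against with a small wrapper. This is only a minimal sketch, assuming the remote is called `origin` and the branch `master`; the function name `push_release` is made up for illustration and is not part of git or Skyscraper.

```shell
# push_release TAG [BRANCH] [REMOTE]
# Hypothetical helper: refuses to push a tag whose commit is not on the branch,
# then pushes the branch first and the tag second.
push_release() {
  local tag="$1" branch="${2:-master}" remote="${3:-origin}"

  # Succeeds only if the tagged commit is the branch tip or one of its ancestors.
  if ! git merge-base --is-ancestor "${tag}^{commit}" "$branch"; then
    echo "Tag $tag is not on $branch; commit or merge first." >&2
    return 1
  fi

  # Push the commits before the tag, so the tag never arrives alone.
  git push "$remote" "$branch" && git push "$remote" "$tag"
}
```

Usage would be something like `push_release 3.5.5`. The ancestor check also catches the detached-HEAD case guessed at earlier in the thread. Without any helper, naming both refs in one command, e.g. `git push origin master 3.5.5`, pushes the branch and the tag together.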

-

Hi,

Thanks for making this scraper; it is really nice to use and looks better than the one that is in EmulationStation.

I have a question regarding the script used in RetroPie: I don't see a parameter to use the scraper only for the ROMs that haven't been parsed yet. This results in downloading the same games over and over, even if a different source already scraped that game.

Is this a limitation of the existing script for RetroPie, or is it not even an option there, requiring you to run the scraper by hand via the terminal? I wish there was an aggregate function that parses all sources in sequence, only for ROMs that were not identified, so you can leave it churning overnight :) Thanks!

-

@raspnoobie There is, but it's not implemented in the script yet. It's a command-line flag: `--flags onlymissing`. It will make sure only games that have no data cached for them (from any source) are scraped with any source you choose. Be aware that if a game has even a single resource cached by any source, it will then be skipped. If instead you want to scrape only games that are missing a certain resource, you can use the `--cache report` option as documented here.

-

Hello @muldjord, I recently ran into a problem on my new Pi 4 while starting to include Playstation 1 games into my Retropie setup.

While Skyscraper does scrape covers, e.g. from Screenscraper & TheGamesDB, and they are saved in the covers cache directory, they won't be copied to `../psx/media/covers` and thus, they're not used as Runcommand loading screens.

Am I missing something? Any help is appreciated.

-

-

Thanks for the quick answer, though I'll admit that I'm a little over my head here. As far as I understand the artwork docs, a single Output node of the type "cover" placed inside of the `<artwork>` node should suffice:

`<output type="cover"> <output>`

All other attributes of the Output node are marked as optional in the docs, but I still get no covers in the media directory.

Is my node wrong? Do I have to re-scrape? … ?

-

@Clyde Your output nodes are mismatched. It has to be:

`<output type="cover"/>`

Place it just before the `</artwork>` node. Xml nodes always come with a begin and an end. If there's no data nested in the node it can be escaped with a `/` at the end as seen above. It is equivalent to:

`<output type="cover"></output>`

You do not have to rescrape, the data is already cached (as long as you didn't disable the cover scraping when you scraped the files to begin with). Just regenerate the gamelist after making the change. That's the power of Skyscraper. Make a change, regenerate, and you're done!
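
For anyone following along, the fix comes down to two steps: make sure the artwork configuration contains a cover output node, then regenerate the game list from the cache. Here is a rough sketch of that, under a few assumptions: the artwork file is at `~/.skyscraper/artwork.xml` (adjust if yours lives elsewhere), running `Skyscraper` with `-p` and no scraping module builds the game list from cached data, and `-a` points it at a specific artwork file. The function name `regen_with_covers` is made up.

```shell
# regen_with_covers PLATFORM [ARTWORK_FILE]
# Hypothetical helper: regenerate the game list only if a cover output node exists.
regen_with_covers() {
  local platform="$1"
  local artwork="${2:-$HOME/.skyscraper/artwork.xml}"   # assumed default location

  # Plain-text search for the start of a cover output node (no XML validation).
  if ! grep -qF '<output type="cover"' "$artwork"; then
    echo "No <output type=\"cover\"> node found in $artwork" >&2
    return 1
  fi

  # No -s option here: the game list is built from the cache, nothing is re-scraped.
  Skyscraper -p "$platform" -a "$artwork"
}
```

Called as `regen_with_covers psx`, it should export the cached covers to the media directory. Note that the `grep` only looks for the opening of the node, so it will not catch a mismatched pair like the one discussed above.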

-

Thank you very much. It's working now. 😀👍

-

@Clyde Awesome! :)

-

@muldjord One last question: Why are covers not exported by default? My first error was to expect that, because I was used to it from other scrapers. 😳

-

@Clyde EmulationStation does not have a `<cover>` node so it would just be unused media files taking up space (unless like in your case, where you use them with the runcommand). Only recently did I decide to make it populate the `<thumbnail>` ES node with the cover. But I won't make it default, as the cover is not a thumbnail. But it's a hack to allow users to export a thumbnail by creating an `<output type="cover">` node. You will notice that your gamelist now has a `<thumbnail>` node for each game that is now populated with the cover. It is only used when browsing in grid mode (unless you use a theme that makes use of it otherwise), and is quite a resource hog to be honest.

-

Again, thanks for the explanation. I actually did notice the new `<thumbnail>` nodes in the `gamelist.xml`. 😊

-

@muldjord Thanks for the clarification; I think I can add the flag to the script, if that is the only thing that is needed. I have been parsing the same games multiple times but ended up with the same 10 games that can't be found :) That's why I was trying not to parse the already-parsed ones again.
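
Until the flag is in the RetroPie script, the "all sources in sequence" idea from earlier in the thread could be approximated by hand with a small loop around `--flags onlymissing`. This is just a sketch: the function name `scrape_missing` is invented, and the module names in the usage line are examples, so use whichever sources you actually scrape with.

```shell
# scrape_missing PLATFORM MODULE...
# Hypothetical helper: run each scraping module in turn, only for games that
# have nothing cached yet. Stops at the first module that fails.
scrape_missing() {
  local platform="$1"
  shift
  local module
  for module in "$@"; do
    echo "Scraping $platform with $module (only games without cached data)" >&2
    Skyscraper -p "$platform" -s "$module" --flags onlymissing || return
  done
}

# Example call (not run here):
#   scrape_missing snes screenscraper thegamesdb
```

Because a game with even one cached resource is skipped, each later module only sees the games that every earlier module failed to find.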

-

Based on my new knowledge about covers in Skyscraper, I made a small script to hardlink all covers in `media/covers` to their `...-launching` counterparts in `images` as Runcommand launching images (see the RetroPie Docs for more about that).

edit: Hardlinks save space by being just additional directory entries for the same data blocks. Unlike soft links, they remain valid after the original entries are deleted. If you want real copies, just change the `ln` command to `cp`.

I'll share it here for anyone who wants an easy way to use Skyscraper's covers as launching images. Everything behind a `#` is merely a comment.

    system="$1"                        # get the system to process (e.g. snes, psx, …)
    cd /home/pi/RetroPie/roms          # go to the roms directory
    mkdir -p "$system/images"          # create "images" subdir in system dir if not already present
    for f in "$system"/media/covers/*  # process all cover images
    do
      # get the filename without its path
      fb=$(basename "$f")
      # hardlink cover.ext to cover-launching.ext
      ln "$f" "$system/images/${fb%.*}-launching.${fb##*.}"
      # explanation of the bash-fu:
      # ${fb%.*}  = gets the filename without the last extension
      # ${fb##*.} = gets only the extension
    done
    cd - > /dev/null                   # return to the original directory quietly

Ideally, put the script in `/home/pi/bin`, where it will be found automatically from any other directory, and give it a meaningful name, e.g. `covers2images`. Make it executable with the command `chmod u+x /home/pi/bin/covers2images`. This way, you can invoke it from any other directory by that name followed by the system to process, e.g. `covers2images arcade`. It will return to that directory after completion.

The same script in one line for one-time use without a script file:

    system=arcade; cd /home/pi/RetroPie/roms; mkdir -p "$system/images"; for f in "$system"/media/covers/*; do fb=$(basename "$f"); ln "$f" "$system/images/${fb%.*}-launching.${fb##*.}"; done; cd - > /dev/null

The one-liner will also work from any other dir and return to it afterwards. The only difference is that you'll have to change the system's name after `system=` manually before running it.

Sharing is caring. ❤️ That said, I'm waiting now for someone who tells me that this could be done much more easily in a different way, or that it is unnecessary for reasons™ altogether. 😉 Anyway, it was a nice exercise to hone my bash-fu, and although I tested it thoroughly, I welcome any bug report or suggestions to improve it.

edit: launching, not loading 🥴
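
To see what the two parameter expansions in the script do to a file name, they can be tried in isolation. A small sketch; the function name `launching_name` and the sample file name are made up for the demonstration.

```shell
# launching_name PATH
# Prints the launching-image name the script would derive from a cover path.
launching_name() {
  local fb
  fb=$(basename "$1")                            # strip the directory part
  # ${fb%.*}  removes the shortest trailing ".something" (the last extension)
  # ${fb##*.} removes everything up to and including the last dot
  printf '%s\n' "${fb%.*}-launching.${fb##*.}"
}

launching_name "psx/media/covers/Dr. Mario (USA).png"
# prints: Dr. Mario (USA)-launching.png
```

Only the last dot counts, so names containing other dots are handled fine. A file with no dot at all is an edge case: both expansions leave the name unchanged, so `README` would become `README-launching.README`.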

-

I'm having a strange issue where some of my scraped DOS games are suddenly no longer scraped. I'll randomly find that games I had previously scraped successfully are missing their media: play count, rating etc. are still there, but all media for that game is gone. When I try to regenerate the gamelist, it's like the media files are missing from the cache too. I can't figure out what I'm doing that's causing this, as I'm not touching these files at all once they are scraped.

Edit: So I just tried rescraping my "pc" folder, and many games that were already scraped and in the cache are getting "game not found" messages.
