Better than CRT quality?

-

I pose a question to you: if Sony had continued to produce professional CRTs, where would we be now?

The direction Sony seemed to be heading in was going for sharper images with as little warping in the screen geometry as possible.

These seem to me to be exactly the things LCD/OLED screens are great at: perfect geometry and razor sharp images. The only thing that really lets them down is their brightness, which may be changing, but we'll get back to that later.

OK, I'll say up front that I have a large Sony PVM (with a MiSTer, to save space, and original controllers), which I love. But it has a number of issues, primarily with vertical arcade games, and it needs a large recapping job to hopefully fix some relatively minor divergence issues. CRTs are sadly ageing, and although I love mine, maybe I need an alternative as a backup - especially while I recap my PVM!

So how might we get "a better than CRT" image on a LCD/OLED screen without using all those high cost, blurry, moire pattern ridden shaders that come with Retroarch/libretro? That's a bit harsh I know some of them are technically excellent - I'm just not a fan of them visually.

Well let's set out one definite feature of a CRT we want to emulate and that's the scanlines! I believe these are fundamental to the retro gaming look.

We also want to aim for crisp pixels and no moire patterns, so we always need integer scaling. Because of this we'll probably want to overscan the image and chop off a few pixels, usually in the vertical axis. The current standard for this on a Raspberry Pi is probably a 5x multiplier on a 1080p screen.
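To put rough numbers on that overscan, here is a minimal Python sketch. The function name and the 224/240 line counts are my own illustrative assumptions (typical low-res source heights), not something RetroArch exposes:

```python
def integer_overscan(source_height, screen_height):
    """Smallest integer multiplier that fills the screen vertically, and what it crops."""
    # Ceiling division: first multiplier whose scaled height reaches the screen height.
    scale = -(-screen_height // source_height)
    scaled_height = source_height * scale
    cropped_screen_rows = scaled_height - screen_height
    cropped_source_rows = cropped_screen_rows / scale
    return scale, scaled_height, cropped_screen_rows, cropped_source_rows


for lines in (224, 240):
    scale, scaled, crop_px, crop_src = integer_overscan(lines, 1080)
    print(f"{lines} lines: {scale}x -> {scaled}px tall, "
          f"crops {crop_px}px ({crop_src:g} source lines)")
```

Under those assumptions a 240-line game at 5x is 1200 pixels tall, so 120 screen rows (24 source lines) fall off a 1080p panel, while a 224-line game only loses 40 rows (8 source lines).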

OK, so far so good. Now on to the all-important scanlines. I want whole pixel scanlines interleaved with pure black. As far as I've been able to find, none of the built-in shaders provide this. I find this a bit strange as it's so simple to do and computationally inexpensive.

Never mind though, I just built one that figures out what integer scaling has been applied and then uses that to draw scanlines that are a whole number of pixels wide:

    #pragma parameter SCANLINE_WIDTH "Scanline Width" 2.0 0.0 8.0 1.0
    #pragma parameter SCREEN_WIDTH "Screen Width" 1920.0 0.0 7680.0 1.0
    #pragma parameter SCREEN_HEIGHT "Screen Height" 1080.0 0.0 4320.0 1.0

    #ifdef GL_ES
    #define COMPAT_PRECISION mediump
    precision mediump float;
    #else
    #define COMPAT_PRECISION
    #endif

    #ifdef PARAMETER_UNIFORM
    uniform COMPAT_PRECISION float SCANLINE_WIDTH;
    uniform COMPAT_PRECISION float SCREEN_WIDTH;
    uniform COMPAT_PRECISION float SCREEN_HEIGHT;
    #else
    #define SCANLINE_WIDTH 2.0
    #define SCREEN_WIDTH 1920.0
    #define SCREEN_HEIGHT 1080.0
    #endif

    // GLSL shader autogenerated by cg2glsl.py.
    #if defined(VERTEX)

    #if __VERSION__ >= 130
    #define COMPAT_VARYING out
    #define COMPAT_ATTRIBUTE in
    #define COMPAT_TEXTURE texture
    #else
    #define COMPAT_VARYING varying
    #define COMPAT_ATTRIBUTE attribute
    #define COMPAT_TEXTURE texture2D
    #endif

    COMPAT_VARYING float _frame_rotation;
    COMPAT_VARYING vec4 _color1;
    struct output_dummy {
        vec4 _color1;
    };
    struct input_dummy {
        vec2 _video_size;
        vec2 _texture_size;
        vec2 _output_dummy_size;
        float _frame_count;
        float _frame_direction;
        float _frame_rotation;
    };
    vec4 _oPosition1;
    vec4 _r0005;
    COMPAT_ATTRIBUTE vec4 VertexCoord;
    COMPAT_ATTRIBUTE vec4 COLOR;
    COMPAT_ATTRIBUTE vec4 TexCoord;
    COMPAT_VARYING vec4 COL0;
    COMPAT_VARYING vec4 TEX0;
    COMPAT_VARYING float Scale;

    uniform mat4 MVPMatrix;
    uniform COMPAT_PRECISION int FrameDirection;
    uniform COMPAT_PRECISION int FrameCount;
    uniform COMPAT_PRECISION vec2 OutputSize;
    uniform COMPAT_PRECISION vec2 TextureSize;
    uniform COMPAT_PRECISION vec2 InputSize;

    void main()
    {
        vec4 _oColor;
        vec2 _otexCoord;
        _r0005 = VertexCoord.x*MVPMatrix[0];
        _r0005 = _r0005 + VertexCoord.y*MVPMatrix[1];
        _r0005 = _r0005 + VertexCoord.z*MVPMatrix[2];
        _r0005 = _r0005 + VertexCoord.w*MVPMatrix[3];
        _oPosition1 = _r0005;
        _oColor = COLOR;
        _otexCoord = TexCoord.xy;
        gl_Position = _r0005;
        COL0 = COLOR;
        TEX0.xy = TexCoord.xy;

        vec2 ScreenSize = max(OutputSize, vec2(SCREEN_WIDTH, SCREEN_HEIGHT));

        if((InputSize.x > ScreenSize.x) || (InputSize.y > ScreenSize.y))
        {
            Scale = 1.0;
        }
        else
        {
            float ScaleFactor = 2.0;

            while(((InputSize.x * ScaleFactor) <= ScreenSize.x) && ((InputSize.y * ScaleFactor) <= ScreenSize.y))
            {
                ScaleFactor += 1.0;
            }

            Scale = ScaleFactor - 1.0;
        }
    }

    #elif defined(FRAGMENT)

    #if __VERSION__ >= 130
    #define COMPAT_VARYING in
    #define COMPAT_TEXTURE texture
    out vec4 FragColor;
    #else
    #define COMPAT_VARYING varying
    #define FragColor gl_FragColor
    #define COMPAT_TEXTURE texture2D
    #endif

    COMPAT_VARYING float _frame_rotation;
    COMPAT_VARYING vec4 _color;
    struct output_dummy {
        vec4 _color;
    };
    struct input_dummy {
        vec2 _video_size;
        vec2 _texture_size;
        vec2 _output_dummy_size;
        float _frame_count;
        float _frame_direction;
        float _frame_rotation;
    };
    uniform sampler2D Texture;
    COMPAT_VARYING vec4 TEX0;
    COMPAT_VARYING float Scale;

    uniform COMPAT_PRECISION int FrameDirection;
    uniform COMPAT_PRECISION int FrameCount;
    uniform COMPAT_PRECISION vec2 OutputSize;
    uniform COMPAT_PRECISION vec2 TextureSize;
    uniform COMPAT_PRECISION vec2 InputSize;

    float mod_integer(float a, float b)
    {
        float m = a - floor((a + 0.5) / b) * b;
        return floor(m + 0.5);
    }

    void main()
    {
        output_dummy _OUT;

        vec2 InPixels = (TEX0.xy * TextureSize) * vec2(Scale);

        if(mod_integer(floor(InPixels.y), Scale) < SCANLINE_WIDTH)
        {
            _OUT._color = COMPAT_TEXTURE(Texture, TEX0.xy);
        }
        else
        {
            _OUT._color = vec4(0.0,0.0,0.0,1.0);
        }

        FragColor = _OUT._color;
        return;
    }
    #endif

Simple and very cheap! Great!
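For anyone who wants to poke at the logic without loading RetroArch, here is a rough Python model of the two decisions the shader makes: the scale search from the vertex stage and the lit/black row test from the fragment stage. It is only a sketch. The function names are mine, the 320x240 input and 1600x1200 viewport are hypothetical example values, and it assumes the output rows map one-to-one onto scaled source rows (it ignores any texture padding):

```python
def detect_scale(input_size, output_size, screen_size=(1920, 1080)):
    """Mirror of the vertex-stage loop: largest integer scale that still fits."""
    # Same as max(OutputSize, vec2(SCREEN_WIDTH, SCREEN_HEIGHT)) in the shader.
    screen_w = max(output_size[0], screen_size[0])
    screen_h = max(output_size[1], screen_size[1])
    in_w, in_h = input_size
    if in_w > screen_w or in_h > screen_h:
        return 1
    factor = 2
    while in_w * factor <= screen_w and in_h * factor <= screen_h:
        factor += 1
    return factor - 1


def scanline_rows(scale, scanline_width, rows):
    """Mirror of the fragment-stage test: True where a row shows the game image."""
    return [(row % scale) < scanline_width for row in range(rows)]


# Hypothetical case: a 320x240 game shown in a 5x (1600x1200) custom viewport.
scale = detect_scale((320, 240), (1600, 1200))
pattern = "".join("#" if lit else "." for lit in scanline_rows(scale, 2, 2 * scale))
print(scale, pattern)  # 5 ##...##...
```

With the default Scanline Width of 2, each group of 5 output rows is two lit rows followed by three black ones.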

If you have a different output resolution for your Raspberry Pi, you'll need to set that up in the shader parameters once you've applied this shader.

This brings up a thorny issue: screen resolution. As far as I can tell, RetroPie can only really output a 1080p image. 4K is technically possible, but the refresh rate takes a hit because the underlying hardware is just not powerful enough, or hasn't been optimised for it. As such, if you are using a 4K screen, your monitor/TV will use its internal upscaler to turn the Raspberry Pi's 1080p output into a 4K image. This will almost always use some sort of bilinear/trilinear etc filter, when what we want is a nearest neighbour filter. Sadly the only monitor I can find that does this is the brand new Eve Spectrum, and I haven't received mine yet to prove this out.

Long and short: if you are using a 4K screen you will get slight blurring and there's very little you can do about it. What I will say is that, at the distance you need to sit to make the scanline illusion work and at the resolution of a 4K screen, you may find it difficult to see the slight blur.

A mention on seating distance: the above shader will not look that good if you are sat on top of a 50 inch screen. You'll need to sit at least 2-3m away from it to get the scanline feel. The smaller the screen, the closer you can sit.

Ok with all that out of the way what's left? Brightness!

The major problem up until recently with LCD/OLED screens has been their lack of brightness compared to a CRT once you add scanlines. This makes sense: with the above shader, a 2 pixel scanline width and 5x integer scaling, 60% of your screen is black! What we need to do is make up for that by turning up the brightness of the TV. However, even at maximum brightness most older SDR LCD/OLEDs aren't bright enough to make up the difference. And even when the brightness is turned up, you start to get light bleed into the black pixels between the scanlines: on a 1080p screen those gaps are only 3 pixels wide, and even with backlit LEDs, each LED will cover those pixels.
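As a back-of-envelope check on that 60% figure, here is a small Python sketch. The function names are mine, and it makes the simplifying assumption that perceived brightness scales linearly with the fraction of lit rows, which ignores gamma and how the eye actually blends the lines:

```python
def black_fraction(scale, scanline_width):
    """Fraction of output rows that the shader paints pure black."""
    lit_rows = min(scanline_width, scale)
    return (scale - lit_rows) / scale


def brightness_multiplier(scale, scanline_width):
    """How much brighter the lit rows must be to match an unmasked image (linear model)."""
    lit_rows = min(scanline_width, scale)
    if lit_rows <= 0:
        raise ValueError("scanline width must be at least 1")
    return scale / lit_rows


print(black_fraction(5, 2))         # 0.6
print(brightness_multiplier(5, 2))  # 2.5
```

So under that simple model the panel has to be about 2.5 times brighter with a 2 pixel scanline at 5x to look as bright as the same image without scanlines.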

Hopefully high end 4K TV's from say Samsung have enough local dimming control and enough pixels to help with this and have enough brightness to make up for the black pixels in between. I'd love to know what people's experience is - please comment below! Once I have the Eve Spectrum monitor I should be able to experiment with a DisplayHDR 600 capable monitor which maybe is enough and may provide the "better than CRT" experience I'm after.

As for OLEDs, maybe they are not bright enough? Again I'd like to know. For the future, MicroLED screens like Samsung's "The Wall" seem to be the perfect solution once they become cheap enough.

Having high-brightness scanlines with pitch black pixels in between should hopefully replicate the bloom effect you get on a CRT screen, i.e. where the scanlines appear wider in bright areas and thinner in dark areas. Again I'd like to hear people's experiences.

Anyway I've probably said enough for now. I know CRTs are king, I'm not saying otherwise, just proposing an alternative way forward. Eve's Spectrum monitor may be the solution to all of this, I'm yet to find out.

I'll post a setup guide for the above shader and settings below. Sadly there are still bugs in RetroPie 4.7.1/EmulationStation/RetroArch/libretro etc that are causing problems, but I'll describe those below.

Thanks!

-

Setup

Download and install WinSCP.

Create two text files, one containing the shader code copied from above in a file called integer_scanlines.glsl and the other in a file called integer_scanlines.glslp containing this:

    shaders = "1"
    shader0 = "shaders/integer_scanlines.glsl"
    filter_linear0 = "false"
    wrap_mode0 = "clamp_to_border"
    mipmap_input0 = "false"
    alias0 = ""
    float_framebuffer0 = "false"
    srgb_framebuffer0 = "false"

Boot Retropie and find out its IP address via the menu Retropie->Show IP.

Open up WinSCP and Session->New Session->New Site

File Protocol: SFTP, Host Name: IP address you just found, Port No.: 22, User Name: pi, Password: raspberry

Login

Copy the two files you just created to: /opt/retropie/configs/all/retroarch/shaders

Then move integer_scanlines.glsl to /opt/retropie/configs/all/retroarch/shaders/shaders

Now open up /opt/retropie/configs/all/retroarch.cfg and change:

    video_scale = 3.0
    video_scale_integer = true
    video_force_aspect = true
    aspect_ratio_index = 21
    video_shader = "/opt/retropie/emulators/retroarch/shader/integer_scanlines.glslp"
    video_shader_enable = true

Optionally you may need:

    video_windowed_fullscreen = true
    video_fullscreen = true
    video_fullscreen_x = 1024
    video_fullscreen_y = 768

Save and reboot your Raspberry Pi.

This should set up almost everything, and you won't need WinSCP anymore.

However, you may want to set up custom viewports or change the scanline widths, and if your output screen resolution is not 1080p you can change that too in the shader parameters.

Custom Viewport

Enter a game, then enter the RetroArch menu, which on my Raspberry Pi is the left hand side button and the middle top row button together (yours may differ).

Then back up to Main Menu->Settings->Video->Scaling. There seems to be a bug in this menu where you have to switch Integer Scale off and then back on again. Then go down to Aspect Ratio and push Right twice to see ‘Custom’, and choose a Custom Aspect Ratio Height and Width of 5x (or whatever you want). Then go to Main Menu->Quick Menu->Overrides->Save Game Overrides to save the custom viewport for the game.

Different Width Scanlines

Go to Main Menu->Quick Menu->Shaders->Shader Parameters and change the Scanline Width to what you want. Then go to Main Menu->Quick Menu->Shaders->Save and Save Game Preset to save this setting for the game.

Custom Screen Resolution

If you are running this with a non 1080p output resolution then go to Main Menu->Quick Menu->Shaders->Shader Parameters and change the Screen Width/Height to what your monitor/tv’s native resolution is. Then go to Main Menu->Quick Menu->Shaders->Save and Save Global Preset.

FBAlpha2012 and Vertical Arcades Bug

I’ve found there is a bug with FBAlpha2012, vertical arcade games, integer scaling and custom viewports. Basically it tells RetroArch/EmulationStation the wrong width and height when rotated, i.e. when you're viewing a game on a landscape oriented screen. This results in an incorrect aspect ratio and breaks this shader badly, but it also breaks all other shaders and it's just wrong. FBNeo does not suffer from this problem, so use that for vertical arcade games when you can. I’m trying to figure out a workaround, although I've yet to find one other than setting a non-integer scaling and explicitly setting the width and height to an integer scaling. This sadly breaks the above shader though, as it still gets passed the wrong width and height. I've seen arguments about this in various forums, but this is just broken from the user's perspective as it breaks the aspect ratio and therefore any shaders.

[EDIT] I made a mistake in thinking it was the Mame2003 core that had the bug; it was actually the FBAlpha2012 core. As this core has been largely superseded by FBNeo, there's probably little to do other than swap over to that more up-to-date core.

-

@fishermanbill said in Better than CRT quality?:

So how might we get "a better than CRT" image on a LCD/OLED screen without using all those high cost, blurry, moire pattern ridden shaders that come with Retroarch/libretro? That's a bit harsh I know some of them are technically excellent - I'm just not a fan of them visually.

moire patterns are resolved if you run at a high enough resolution (more pixels per scanline), and also can be virtually invisible on a 1080p display IF it is configured correctly (ie, running it in full panel resolution - https://retropie.org.uk/docs/Overscan/#my-image-is-cut-off). it's tricky with vertical games as they use such a small portion of the screen, so even less pixel density to work with, but check out https://retropie.org.uk/forum/topic/4046/crt-pi-shader-users-reduce-scaling-artifacts-with-these-configs-in-lr-mame2003-lr-fbalpha-lr-nestopia-and-more-to-come

Well let's set out one definite feature of a CRT we want to emulate and that's the scanlines! I believe these are fundamental to the retro gaming look.

We also want to aim for crisp pixels and no moire patterns so we always need integer scaling.

i don't necessarily agree with this because many systems/games did not use 1:1 PAR. eg, if you use integer scaling with Street Fighter II (arcade) it will be in a widescreen type mode, making all the characters 'fat'. but yeah it can give good results for sure, if you're careful with games that don't have 1:1 PAR.

This brings up a thorny issue - that of screen resolution. Retropie as far as I can tell can only really output a 1080p image - 4K is technically possible but the refresh rate takes a hit as the underlying hardware is just not powerful enough or hasn't been optimised for

you can run retropie on an x86 pc, or faster SBC, etc.

This will almost always use some sort of bilinear/trilinear etc filter

not sure what you mean by this? a 4k screen should render a 1080p image crisply with no scaling artefacts, since 1920x1080 (1080p) x 2 is 3840x2160 (4k) - again you will need to ensure you're on the right display mode to ensure it's using every pixel of the panel.

I’ve found there is a bug with Mame2003, vertical arcade games, integer scaling and custom viewports. Basically it tells Retroarch/EmulationStation the wrong width and height when rotated i.e you're viewing a game on a landscape oriented screen. This results in an incorrect aspect ratio and breaks this shader badly but it also breaks all other shaders and its just wrong.

i'm not quite sure what you mean by this. i have some experience with vertical games + shaders + mame2003 but i'm not aware of this bug.

(sorry for the pedantry and avoiding the substance of the post, just aspect ratios are my passion, lol - the shader/discussion is cool!)

-

Thanks for your reply! I suppose I should have made clear in my original post that I did tailor it to RetroPie as in outputting 1080p to various screens as I think the RetroPie is an ideal device for retro gaming largely because of its size. Sure you can use a PC to directly output to different screen resolutions but I probably would have posted to the LibRetro forum for that type of stuff. In any case the approach I want to use here still has the same problems of screen brightness on PC.

So as for moire patterns: OK, you may not perceive them in certain scenarios, but the underlying problem of uneven or blurry scanlines still exists, and as long as that exists you have the potential to witness moire patterns.

Of course all the standard libretro shaders deal with the problem by either having uneven scanlines or just blurring them. That's fine if you like that sort of thing. I personally don't, and as you touch on, the problem gets worse when you go down to lower res screens such as Pimoroni's Picade with its 1024x768 screen. Also, seeing all of the screen on CRTs was never a thing - there was always some part of the screen chopped off - so in a way using an 'overscan' resolution is more accurate, but yes, integer overscanning does cut off more of the screen than most TVs did back then.

Essentially I want to solve this problem by instead using integer scaling and precise pixel scanlines, and by default libretro (and therefore everything based on it) doesn't really provide an option out of the box for this approach - at least not a shader. Also its custom viewport support seems buggy - at least in RetroPie 4.7.1 (I'll come back to this below).

As for Street Fighter II, yes I'm aware of the fat character problem and the reason for 1:1 PAR. So yes, in the purist approach of my post you would have to accept fat characters. However, as you know, aspect ratio is a ratio between the width and height, so you can still have integer scaling in the all-important y axis, maintaining crisp scanlines, and then have non-integer scaling in the x axis to get the right PAR and make the characters look 'normal', or whatever that was for the screen you were looking at it on. Sadly I'm not sure RetroArch's interface (or supported interface at least) makes that easy to do - it is possible though.

"a 4k screen should render a 1080p image crisply with no scaling artefacts, since 1920x1080 (1080p) x 2 is 3840x2160 (4k)"

You would expect that, but no TV/monitor on the market will do that - if you input a 1080p signal it will use a bilinear/trilinear/smart etc blur filter ('smart' being an adaptive blur filter) to upscale it to 4K, not a nearest neighbour filter. This is because for normal footage that's (arguably) better, but for pixel art it's not. Some Panasonic screens I believe had a 'game mode' that would directly scale it as you say, i.e. 1 pixel upscaled directly to a 2x2 pixel quad. However I believe those models were discontinued. Eve's Spectrum is the first monitor on the market to provide an integer upscale.

Let me get some more information for you on the mame2003 + vertical arcade + custom viewport + integer scaling bug. It may be an out of date RetroArch/mame2003, or I may be using a different core to what I thought. It's definitely a bug on the version of RetroPie I have - I'll take some screenshots to show you.
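To be concrete about what I mean by a nearest neighbour integer upscale, here is a tiny Python sketch. It is purely illustrative (the function name and the 2x2 test image are made up, and this is not how any TV scaler is actually implemented): each source pixel is copied unchanged into a factor x factor block, with no blending between neighbours.

```python
def upscale_nearest(image, factor):
    """Integer nearest-neighbour upscale: every pixel becomes a factor x factor block."""
    out = []
    for row in image:
        # Repeat each pixel horizontally...
        wide = [px for px in row for _ in range(factor)]
        # ...then repeat the widened row vertically.
        out.extend(list(wide) for _ in range(factor))
    return out


checker = [[0, 1],
           [1, 0]]
for row in upscale_nearest(checker, 2):
    print(row)
```

A bilinear filter would instead produce in-between values along the 0/1 edges, which is the blur I'm talking about.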

-

@fishermanbill said in Better than CRT quality?:

Thanks for your reply! I suppose I should have made clear in my original post that I did tailor it to RetroPie as in outputting 1080p to various screens as I think the RetroPie is an ideal device for retro gaming largely because of its size.

what i mean is 'retropie' does not mean 'raspberry pi' - it's an emulator setup script that can be installed on many devices, including PC. i think you mean 'retropie on raspberry pi' :) (pedantic i know...)

Of course all the standard shaders of libretro deal with the problem by either having uneven scanlines or just blurring them. Thats fine if you like that sort of thing I personally dont and as you touch on, the problem gets worse when you go down to lower res screens as in Pimoroni's Picade with its 1024x768 screen.

the general rule is that a scanline shader needs 4 vertical rows of pixels for every scanline. on a typical 320x240 game that would be 240*4=960, so a 1080p screen should generally give you good results with no obvious blurring, but 768 will be a very bad time and demand integer scaling (and the ensuing borders/overscan).
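a quick python sketch of that rule of thumb, for anyone who wants to check their own panel. the function names are just illustrative, and the 4-rows figure is only the rule quoted above, not a hard limit:

```python
ROWS_PER_SCANLINE = 4  # the rule of thumb quoted above


def rows_per_source_line(source_lines, screen_height):
    """How many panel rows are available for each line of the game image."""
    return screen_height / source_lines


def meets_rule(source_lines, screen_height):
    return rows_per_source_line(source_lines, screen_height) >= ROWS_PER_SCANLINE


for screen_height in (768, 1080, 2160):
    ratio = rows_per_source_line(240, screen_height)
    print(f"{screen_height}: {ratio:.2f} rows per scanline, ok={meets_rule(240, screen_height)}")
```

for a 240-line game that gives 3.2 rows on a 768 panel (below the rule), 4.5 on 1080p and 9 on 4k.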

but... if you use an integer scale on those scanline shaders there should be no blurring.

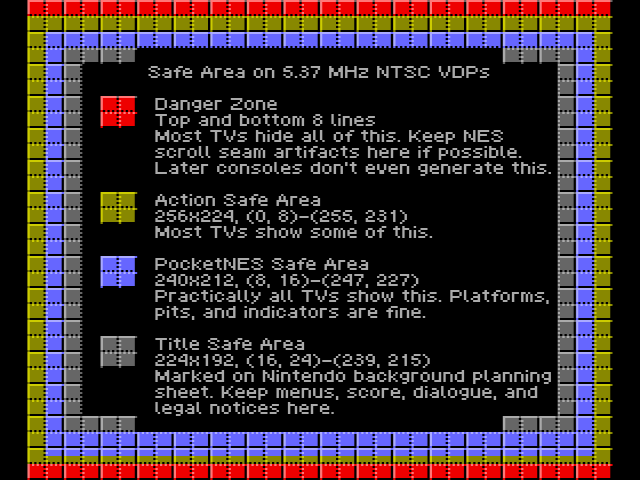

Also seeing all the screen on CRT's was never a thing - there was always some part of the screen chopped off - so in a way using an 'overscan' resolution is more accurate but yes integer overscaning does cut off more of the screen than most TV's back then.

for home consoles this is true, but there is a certain amount of overscan that is expected for such systems - there is normally no game-critical information rendered in those portions, as you can't guarantee that a given home CRT is going to display it. conversely, some games actually hid necessary garbage in those areas on the presumption that 99% of sets wouldn't show it - eg mario 3's extreme horizontal edges.

so, yes having some overscan is accurate for home consoles, but only within those specific screen regions.

...but arcade machines are different. these were not consumer CRTs and arcade machines were configured by technicians/arcade owners per-game. these games regularly use the whole of the 240p (or whatever) image. for example, see how the score is right at the top of the screen, and the bombs right at the bottom in donpachi:

However as you know aspect ratio is a ratio between the width and height and so you can still have integer scaling in the all important y axis whilst maintaining crisp scanlines and then have a non integer scaling in the x axis to have a PAR aspect ratio and make the characters look 'normal' or whatever that was for the screen you were looking at it on. Sadly I'm not sure Retroarchs interface (or supported interface at least) makes that easy to do - it is possible though.

yeah, you can do this, although that will inevitably cause borders/overscan depending on whether you want to run under/over. i did actually experiment with this approach using the scripts i linked previously, but i found it not worth it.

but it does require some calculations. in the SF2 example, if you use integer scaling on Y, and leave X at non-integer, you're still going to end up distorting the image unless you adjust X, because the aspect ratio is going to change. what you'd need to do is adjust X accordingly. eg, for SF2 at 5x Y, that's 5x224=1120. the X is 1440 by default (ie, it's a 4:3 game so if Y is 1080, then X is 1440), so to maintain 4:3 for a 1120 Y we'd have to change X to 1493(.333). it won't automatically do this, but a script like the one i linked before could do these calculations for you, with adjustment.
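the calculation above can be sketched in python like this. the function name is made up, and the 384-pixel source width is my assumption for the SF2 arcade board, so swap in whatever your game actually uses:

```python
from fractions import Fraction


def viewport_for_integer_y(source_lines, y_scale, display_aspect=Fraction(4, 3)):
    """Viewport size that keeps an integer Y scale and the intended display aspect."""
    height = source_lines * y_scale
    width = height * display_aspect  # exact fraction, round when you enter it
    return height, width


height, width = viewport_for_integer_y(224, 5)
print(height, float(width))  # 1120 and roughly 1493.33

# assumed source width of 384 pixels: the resulting X scale is not an integer
print(float(width / 384))    # roughly 3.89
```

so Y stays at a clean 5x while X lands on a non-integer scale, which is what keeps the characters from looking 'fat'.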

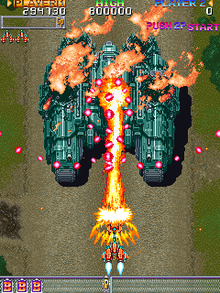

also, you can do this manually via the RGUI: when you select custom resolution it tells you when you're at an integer scale as you adjust the resolution (see "4x"):

but yeah you'd still need to adjust X as per a calculation.

"a 4k screen should render a 1080p image crisply with no scaling artefacts, since 1920x1080 (1080p) x 2 is 3840x2160 (4k)"

You would expect that but no TV/monitor on the market will do that - if you input a 1080p signal it will use the bilinear/trilinear/smart etc blur filter ('smart' being an adaptive blur filter) to upscale it to 4K not a nearest neighbour filter. This is because for normal footage (its arguable) thats better but for pixel art its not.

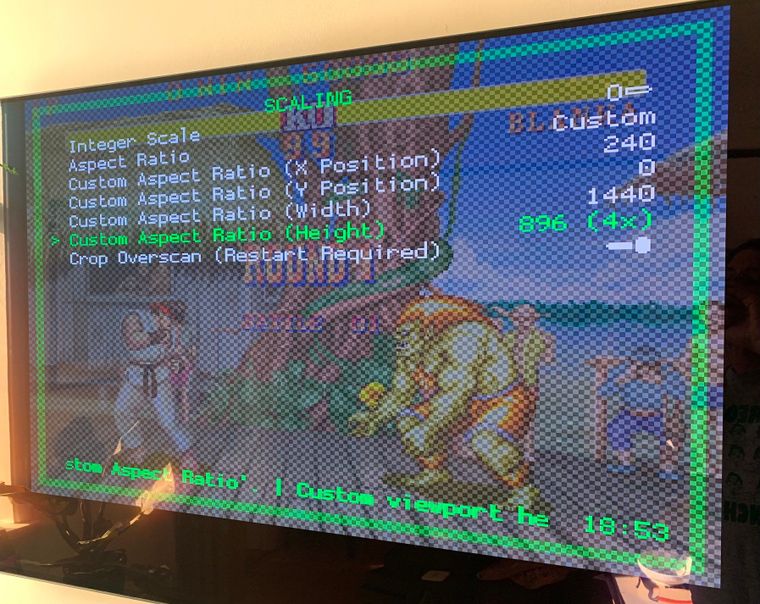

i have not experienced this with my 4k TV at 1080p - an LG OLED, but you do have to correctly configure it, as i've said. here's some proof - a close up shot of the console at 1080p:

the linux console, by default, has a font made up of lines 1-2 pixel 'thick'. there's no bilinear filtering or font smoothing. as you can see, this becomes 2x2 squares on my screen. forgive the light bleed - this is with some glare from the sun, but the light is only being emitted from those OLED diodes, with no scaling/smoothing beyond that basic 1x1=2x2 transformation.

i would be very surprised if this configuration is not possible for all major TV brands - we have instructions for Samsung, LG and Pioneer here: https://retropie.org.uk/docs/Overscan/#my-image-is-cut-off (please ignore that this is the overscan doc - this also fixes an overscan issue but what these tweaks effectively do is make sure an HD/4k source image is displayed 1:1 on HD/4k tvs).

-

@dankcushions

I'm pretty sure your big image of the 'P' is showing exactly what I'm saying, i.e. it's not mapping one pixel directly to a 2x2 pixel quad with no adjustments. The 'P' is surrounded by a one pixel pattern - that wouldn't be possible with a pure nearest neighbour upscale, right? You can argue it's light bleed, but then you have to ask how the TV knows it's displaying pixel artwork and shouldn't be upscaling it with a blur filter, as all other media would want that, including high end consoles. If it's guessing then it's going to get it wrong at some point.

I'll have a look at your scripts as they may help to automate things for me - thanks!

So in my opinion 4 or 5 pixels is not enough to provide a decent representation of a scanline surrounded by black, including a faux bloom effect. You now have only two or three pixels to contain the blurred edge. It's just too low a resolution for what people want to do, and this really gets to the heart of my original post: why bother blurring the edge of the scanlines to mimic the high brightness of a CRT when we can probably now just use the HDR brightness of modern monitors/TVs to actually create a real bloom ourselves?

As for the 5x overscan approach, this is pretty much the standard on the MiSTer platform and what most people recommend over there for LCDs. In fact last year the lead developer added support for overscanning and integer scaling like this to all the cores for this very reason. However it's up to you whether you like this approach vs others; there are always swings and roundabouts with this.

I would have thought most people on the RetroPie forum would be using a RetroPie image on a Raspberry Pi, but then again maybe I'm missing something and this is actually an EmulationStation on Raspberry Pi forum.

-

@fishermanbill

Yes, that's definitely not light bleed on the 'P's one pixel pattern edge - you can see on the right hand side that the one pixel pattern has a gap of dark sub pixels and then one light sub pixel, which wouldn't happen if it was light bleed. They are also the same luminosity on both sides - left and right. It's difficult to say for certain, but I'd guess those pixels are being turned on by your TV.

-

@fishermanbill i have to apologise - i tried it in native 4k and didn't have that light bleed (i should have trusted OLED tech better!), so clearly there was something up, but i found the setting - super resolution - it adds detail to sub-4k images - ie antialiasing. this was enabled by default, even in game mode - by turning it off i have pixel perfect fonts:

I would have thought most people on the RetroPie forum would be using a RetroPie image on a Raspberry Pi but then again maybe I'm missing something and this is actually an EmulationStation on Raspberry Pi forum.

most people, yes, but i was just making the point that retropie on pc (or odroid or whatever) is still "retropie" :)

-

@dankcushions

No need to apologise at all, this is a learning process for all of us and I didn't know an option existed on the LG OLEDs for such a thing. Very interesting. Could you do me a favour and see how good its support for integer scaling is? As in, is it only from 1080p to 4K that it does it? Also something else looks to be going on with the top of the 'y' and possibly the bottom of the back stroke of the 'y' - local dimming maybe (hmm, actually no as it's an OLED), or just the effect of the camera? To be honest that's a minor nitpick if the image is being scaled correctly for pixel art with a nearest neighbour filter.

I believe Eve's Spectrum monitor allows complete control of the image like RetroArch does, in that it will allow you to take in lower image resolutions (not just 1080p), integer scale them up beyond the screen size and then move the image around with an offset. However I've yet to see anybody who's got one show that functionality. Potentially this could offload work from the Pi to the monitor and allow the Pi to run cooler and/or faster with overclocking. Mind you, at that point you could argue it's better to get a more powerful computer than a more costly monitor, but then there's the image quality.

Going back to my original post and brightness, I'm not sure an OLED is going to be bright enough to get up to high end CRT brightness levels. I think the LG OLED C1 manages about 350cd/m2 and I think we'd need double that to get a nice natural bloom effect - I could be wrong. Possibly the Samsung QLED TVs might be able to achieve it, but then I'm not sure that the backlight LEDs are small enough not to cause light bleed.

-

@dankcushions said in Better than CRT quality?:

super resolution

Ah, I've just looked into LG's 'Super Resolution' and it's not a nearest neighbour upscaler. Although it's done a good job of your test above, as in what we would like for pixel art, I don't think it would do the same job in all, or even the majority, of cases. I think as soon as you bring in colour and motion it'll do all sorts of things, as it's a 'smart' upscaler like the ones I mentioned in my original post. It'd be interesting to see some tests to prove that theory out, but the 'y' in the picture above starts to hint at that kind of thing going on.

-

@fishermanbill to be clear super resolution is turned OFF in my photo. it’s the upscaler that adds fidelity when upscaling from sub-4k, when in our case we don’t want that, so i turned it off. in the absence of that it appears to do a simple 1x1=2x2 transform.

-

Ah sorry, I misread that - that makes more sense - OK good, so it is doing nearest neighbour!
